As part of my project to emulate an IBM PC or XT with cycle accuracy, I need to be able to get the machine into a completely known and consistent state, which I call lockstep. That way I can run a program many times and be sure of getting exactly the same result each time.

This is a bit tricky, because while all the PC's clocks are derived from a single 14.318MHz crystal, they divide it in different ways. The CPU clock is made by dividing this frequency by 3, the PIT clock is made by dividing it by 4 and the CGA clock is made by dividing it by 16.

Getting the CPU clock in lockstep with the CGA clock is the difficult bit, since the CGA clock is in lockstep with the PIT clock by definition (assuming that such a lockstep is possible - I'm not sure offhand if the phase relationship between the PIT and the CGA clock is always the same or if it's randomized on startup - the latter would make it more complicated to use the PIT in lockstep mode, but that's not really a big problem since the point of lockstep mode is to be able to do timing statically).

Since the CGA clock and the CPU clock have periods which are relatively prime numbers of hdots, it's definitely possible to get them into lockstep. Once I had a rough idea of what the CGA wait states were, I realized that achieving lockstep ought to be possible with a combination of delays and CGA accesses. The algorithm would be:

- Do a CGA memory access, reducing the number of possible relative phases from 16 to 3.

- Delay for A ccycles.

- Do a CGA memory access, reducing the number of possible relative phases from 3 to 2.

- Delay for B ccycles.

- Do a CGA memory access, reducing the number of possible relative phases from 2 to 1.

Delaying for 16 ccycles gives the same relative phases as delaying for 0 ccycles, so the problem boils down to finding A mod 16 and B mod 16. That's only 256 possibilities (and probably quite a few of those will work) so trial and error works fine. Delaying for particular numbers of cycles is okay too - the 8-bit MUL instruction takes 69 ccycles plus 1 ccycle for each set bit in AL, so as long as you don't mind waiting for 69 ccycles you can get a delay of any number of ccycles you like.

But there's a more fundamental problem - how do we recognize when we've succeeded? The definition of lockstep involves consistency - ending up in a known end state no matter what the initial state. So in order to determine whether we're in lockstep or not, we really need to be able to control the initial state - in other words, in order to figure out whether we're in lockstep or not we first need to be in lockstep! That's a bit of a chicken-and-egg problem.

If I knew exactly what the CGA wait states were at this stage I could have figured out the right A and B values on purely theoretical principles, but I didn't - my examinations of the CGA schematics left some questions (particularly in areas involving how the 8088 treats READY signals occuring at different clock phases, and how some apparent race conditions actually turn out in real hardware). It was only in the course of achieving lockstep that I discovered what the CGA wait states actually are.

I had a few false starts involving identifying the 6 behavior classes for the 27 possible transition tables involved in the long-term behaviors of repeated sections of code. For example, if a piece of repeated code has 3 possible long-term behaviors depending on the relative phase at the start, I know that the repeated section must leave the relative phase alone.

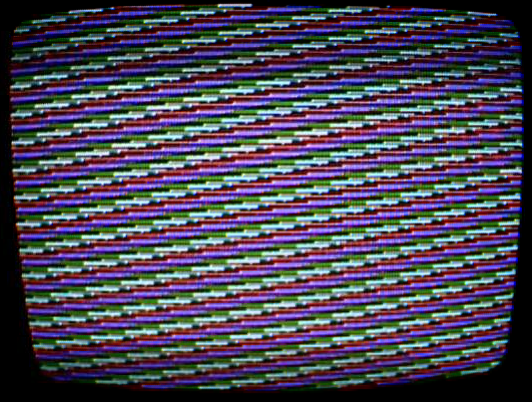

But that was getting rather complicated, and I wasn't really getting anywhere until I hit on a better way - I realized that I could visualize exactly how long a piece of code was taking by running it and then changing the CGA palette register, which has an immediate effect, so marks the position on the screen where the electron beam was pointing when the register changed.

That's only useful if the transition happens in exactly the same place on the screen in each frame (otherwise you don't get a stable image and can't see what's going on). Which sounds like we're back to the chicken-and-egg problem again. But it's a more limited kind of lockstep that we need for this particular experiment - we don't need absolute lockstep, just a way of getting code to run at a consistent place relative to the raster beam, from frame to frame. That is to say, it doesn't matter if next time we run the program the image appears in a different place on the screen.

Fortunately, there's a way to do this on the PC even if we don't have full lockstep, since we can use the PIT to introduce a lockstep that's just consistent from frame-to-frame, not from run-to-run. If we set a timer to go off exactly 262*912/12 = 19912 PIT cycles, it'll occur exactly once every frame. That's not quite enough though, because interrupts don't quite have an instantaneous effect on the CPU - the CPU does finish whatever instruction it's currently executing before starting an interrupt. So you have to make sure it's not executing any instructions - i.e. is in the halt state. Another complication is that I had to disable all other interrupts and the DRAM refresh in order to avoid them messing up the timings, which meant that I had to access each DRAM row within a certain period of time lest the capacitors discharge and the memory contents decay, which meant that I couldn't leave the CPU halted for too long!

Once I had a stable image I was able to generate the 16 different CGA/CPU relative phases with multiply instructions, and made a diagonal line that advanced 3 hdots (1 ccycle) on each line just by cycle counting. Then by placing CGA memory accesses between this diagonal line and the palette write I was able to see exactly what the CGA wait states were:

It look a while to get this image because whenever I added some code to a line I had to change the delay code at the end of the line to get the start of the next line to line up correctly, so there was a fair amount of trial and error involved.

In this image, every other scanline displays (just using normal 640-pixel graphics mode) a pattern that repeats every 16 pixels, so that I can see where the lchar boundaries are.

Once I had the CGA wait states, getting CGA/CPU lockstep was relatively easy. Here's a photo I took when I finally got it:

Note that of the lower 16 black horizontal lines, they all end at the same position mod 48 hdots (you'll have to take my word that before the lockstep code they were at 16 different relative phases, like the lines further up which make a diagonal pattern).

Phew, that's a lot of complications for such a tiny piece of code!

Tomorrow we'll look at how to get the CRTC in lockstep as well.