My post yesterday devolved a bit into ranting about how Microsoft's processes have the unintended consequence of causing the code to increase in complexity in the long term. This raises the question "how could Microsoft change to avoid this?"

Back in the 90s, Microsoft was generally considered to be an excellent place to work (much as Google is now). It still is to some extent, but today that "excellent" means more "sensible" than "exciting". In the 90s, Microsoft was often described as having a culture that resembled a collection of many small startup companies rather than one large corporation. I think that was pretty much gone by the time I started working there in 2001 - by then it was very corporate (sometimes embarrassingly so). Meanwhile, startup companies are doing the kinds of development that Microsoft can only dream about, on tiny budgets.

Reading Paul Graham's essays about startups has given me some hints about how these startups can be so effective in a way that Microsoft can't, as well as why the ones that fail do.

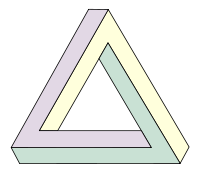

There is a whole spectrum of corporate cultures, from two or three guys in an apartment at one end (let's call it the left end for the sake of argument) to Microsoft at the other (the right end). The left (when successful) is great at motivating its employees to do great things, rapidly prototyping and turning over problems quickly. The right is great at (eventually) producing very large, complicated, highly polished pieces of software, being consistent and avoiding risk.

To really be successful you need to work at both ends of the spectrum (and everywhere in between). The ecosystem of people founding startups, writing some great software and then getting bought by Microsoft (or Yahoo, Google, whoever) has done some pretty great things. But does Microsoft need all those startups to exist externally to itself in order to do the startup-y things? Perhaps not, if it can return to the culture it had in the 90s.

Startups need only a couple of things to exist - talented people and money. Both of these Microsoft has in spades. But they also need these people to be very motivated. Microsoft isn't a great motivator of people anymore - the difference in rewards between those who are putting in a reasonable minimum effort and those who are going all out is (in the grand scheme of things) small, and it takes a long time of working consistently hard to rise above the crowd. The average employee knows that it is very unlikely that they will ever be able to make a big difference to anything. Certain philosophical positions adopted by Microsoft mean that it is no longer highly regarded by the geeks that it needs the most.

One thing Microsoft could do to revitalize itself would be to incubate startups inside itself. Allow employees to form small teams to solve some specific problem. If they succeed, they are rewarded with lots of money. Even if this reward approaches the amount that they might get from a successful startup, it would probably be much cheaper for Microsoft than buying the equivalent startup, assuming a reasonably high success rate. These internal startups could even compete with each other (especially if there are multiple approaches that seem promising).

These startups should be autonomous for the most part - they should be able to choose the software and programming languages that make the most sense for them. They should not have to coordinate with other teams who might be doing similar things, or worry about treading on the toes of others. They should be allowed to concentrate on writing great software. Unlike startups in the real world, they won't have to worry about getting funding (just getting a first version working in the agreed-upon time). They can have office space on campus or work in somebody's apartment if they prefer. If Paul Graham is to be believed, these factors will cause the success rates of these internal startups to be much greater than those of startups in the real world.

While the resulting software might not meet Microsoft's standards for security, localization etc., these things are probably better done at the more corporate end of the spectrum, by those employees who prefer the stability and "sensible"ness that you get with that corporate culture. The left will quickly produce imaginative, highly competitive software and the right will polish it to corporate standards - the best of both worlds.

The downside for Microsoft of this approach would be the drain of talent from the "classic" product units, and possibly also from the company altogether (if successful "startup" employees were rewarded with what Neal Stephenson describes as something rhyming with "luck you money"). But if that drain is happening already, maybe there's nothing to lose.