I implemented my "ClearType for images" idea, and here are the results.

![]()

![]()

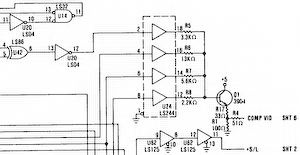

(This image, by the way, is part of the schematic of the original IBM CGA card - specifically, the composite DAC stage which I've mentioned before.)

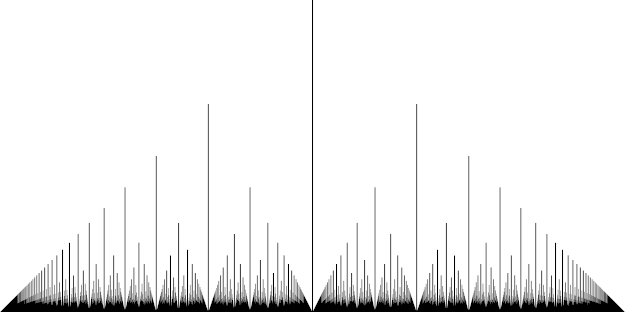

The first image was resampled by Paint Shop Pro 4.12 (I know it's ancient, but I never got on with any of the newer versions). This resampling algorithm works directly on the sRGB values (it's not gamma corrected), so whenever there's a grey pixel in the output image, it's too dark: averaging the encoded values underestimates the average light intensity.
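
To make the "too dark" effect concrete, here is a minimal sketch that averages a black and a white pixel both ways. It uses the standard sRGB transfer function; the function names are my own for illustration, and this is not a description of what Paint Shop Pro does internally.

```python
def srgb_to_linear(c):
    """Decode an sRGB value in 0..1 to linear light."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

def linear_to_srgb(l):
    """Encode a linear-light value in 0..1 as sRGB."""
    return l * 12.92 if l <= 0.0031308 else 1.055 * l ** (1 / 2.4) - 0.055

def average_srgb(a, b):
    """Naive average of two 8-bit values, done directly on the encoded values."""
    return int((a + b) / 2 + 0.5)

def average_linear(a, b):
    """Average of two 8-bit values, done in linear light and re-encoded."""
    mean = (srgb_to_linear(a / 255) + srgb_to_linear(b / 255)) / 2
    return int(linear_to_srgb(mean) * 255 + 0.5)

if __name__ == "__main__":
    print(average_srgb(0, 255))    # naive result: mid-grey code value
    print(average_linear(0, 255))  # gamma-correct result: noticeably lighter
```

The naive average lands on 128, while the linear-light average comes out at roughly 188, which is why thin black lines on white end up looking too heavy in the first image.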

The second image was resampled using proper sRGB<->linear conversion and an ideal sinc filter (which means it's very slow - several seconds per image). If you zoom into this, you'll notice some "ripples" around the horizontal and vertical lines, which are due to the band-limited resampling - getting rid of them would require higher spatial frequencies than this image can accurately reproduce, so really the image is about as correct as it can be. (Because the ripples are clipped on the high side, this does make the image slightly darker on average than it should be, but the difference isn't noticeable to the human eye.)
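
The ripples and the clipping can be seen in one dimension. The following is a rough sketch, assuming an untruncated sinc evaluated over the whole row and weights normalised to sum to one; `resample_row` and `clip` are hypothetical names, and the input is taken to be already in linear light.

```python
import math

def sinc(x):
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)

def resample_row(src, dst_len):
    """Downsample a row of linear-light values with a sinc filter
    band-limited to the output pixel rate."""
    scale = len(src) / dst_len          # source samples per output pixel
    cutoff = min(1.0, 1.0 / scale)      # never widen the band when upsampling
    out = []
    for i in range(dst_len):
        centre = (i + 0.5) * scale - 0.5  # output pixel centre in source coordinates
        total = weight_sum = 0.0
        for j, value in enumerate(src):
            w = sinc((j - centre) * cutoff)
            total += w * value
            weight_sum += w
        out.append(total / weight_sum)
    return out

def clip(values):
    """Clamp to the displayable range; this is where overshoot is lost."""
    return [min(1.0, max(0.0, v)) for v in values]

if __name__ == "__main__":
    edge = [0.0] * 33 + [1.0] * 31      # a black-to-white step
    out = resample_row(edge, 32)
    print(min(out), max(out))           # undershoot below 0, overshoot above 1
    print(min(clip(out)), max(clip(out)))
```

Every source sample contributes to every output pixel here, which is also why this approach is slow: the cost grows with the product of the input and output sizes.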

The third image was resampled by the "ClearType for images" algorithm (again with a sinc filter in linear space), with the band-limit set at the subpixel resolution. As you can see, it's noticeably sharper, but it does have annoying "fringes" of colour as thin lines move from one subpixel to another.

The fourth image is the same as the third, except that the band-limit is that of the pixel resolution rather than that of the subpixel resolution (but the filters are still centred on the subpixels for the corresponding channels). This completely eliminates the fringing (yay!). As expected, it's not actually sharper than the non-ClearType image, but some of the near-vertical lines are smoother and the text is much more legible than in any of the other versions.
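
Here is a one-dimensional sketch of the difference between the third and fourth images. It assumes an RGB-striped panel with the red subpixel a third of a pixel to the left of the pixel centre and the blue a third to the right, and it models the "subpixel band-limit" simply as a cutoff three times higher; both are assumptions for illustration, and `resample_subpixel` is a made-up name rather than the actual implementation.

```python
import math

def sinc(x):
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)

def filtered_sample(channel, centre, cutoff):
    """Sinc-filter one channel around `centre` (in source coordinates)."""
    total = weight_sum = 0.0
    for j, value in enumerate(channel):
        w = sinc((j - centre) * cutoff)
        total += w * value
        weight_sum += w
    return total / weight_sum

def resample_subpixel(row, dst_len, subpixel_band_limit=False):
    """Downsample a row of linear-light (r, g, b) tuples, centring each
    channel's filter on its own subpixel (assumed R, G, B stripe order)."""
    scale = len(row) / dst_len
    cutoff = 1.0 / scale                    # pixel-rate band-limit (fourth image)
    if subpixel_band_limit:
        cutoff = min(1.0, 3.0 * cutoff)     # subpixel-rate band-limit (third image)
    channels = list(zip(*row))              # separate R, G and B rows
    offsets = (-1 / 3, 0.0, 1 / 3)          # subpixel centres, in output pixels
    out = []
    for i in range(dst_len):
        out.append(tuple(
            filtered_sample(channels[ch], (i + 0.5 + off) * scale - 0.5, cutoff)
            for ch, off in enumerate(offsets)))
    return out

if __name__ == "__main__":
    edge = [(0.0, 0.0, 0.0)] * 30 + [(1.0, 1.0, 1.0)] * 30
    print(resample_subpixel(edge, 20)[9])   # pixel just left of the edge
```

With the pixel-rate band-limit, the three channels are samples of the same smooth signal taken a third of a pixel apart, so a grey edge stays a gentle ramp across the subpixels. Values still need clipping and sRGB encoding before display.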

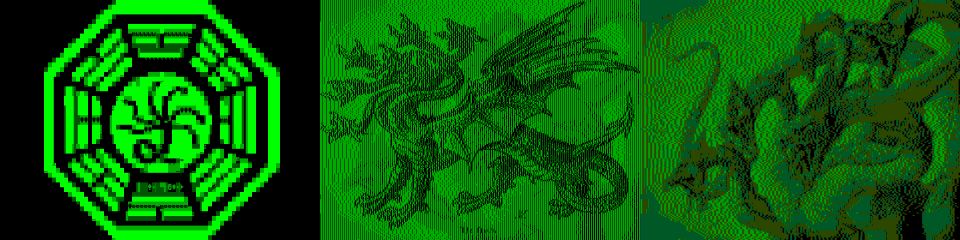

Here's the same four methods with a colour image:

![]()

![]()

First let's look at the one with incorrect gamma - notice that a lot of the sharpness in the detailed regions is gone.

The second image is much better - the details remain sharp.

The third image is a mess - details exhibit aliasing problems because when the image has detail in just one channel, the band-limit frequency is three times the pixel frequency.

The fourth image is pretty similar to the second but there are a few places where sharp, near-vertical lines are a bit smoother, for example the brightly lit part on the left of the hat.

So, I think this experiment is a success. The downside is that I now need to go back through all my previous blog posts and resample the images again to improve them.