There seem to be lots of people on the internet who don't really understand evolution. You can tell who they are because they ask things like "If people evolved from monkeys, why are there still monkeys?" and claim that there are things called microevolution and macroevolution which are qualitatively different. Sometimes this happens because people perceive there to be a conflict between their preconceived beliefs and the theory of evolution. Sometimes, though, it's just that they were taught by people who have such perceptions, which is a bit of a tragedy because poor teaching will leave these students less able to contribute to science in the future.

Without pretending to have any answers for the more fundamental problem of bad teaching, I do have an idea which could help people get an intuitive understanding of how evolution works.

Really what evolution says is that ultimately there is a single family tree - we're all related if you go back far enough. Also, the blueprints for our bodies are not engraved in some unalterable stone tablet sealed in a cryogenic vacuum somewhere - they're stored as atomic-scale patterns inside our warm, wet cells and therefore change from time to time (albeit very slowly by the timescales we're used to).

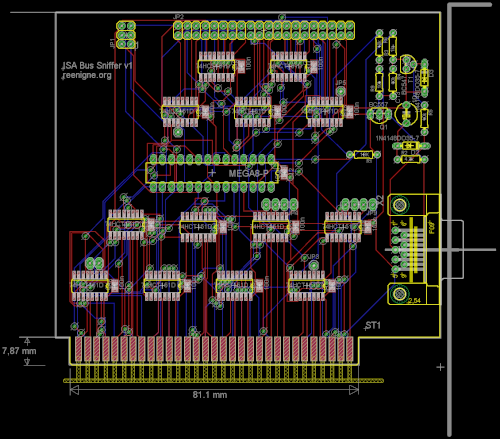

So what I'm imagining is a phylogenetic tree viewer with a twist - you can zoom into a phylogenetic tree, and if you zoom in far enough you can see individuals and the parent-child relationships between them, like a normal family tree. Individuals would actually be shown as what a typical individual of that lineage would have looked like (possibly with typical random variations). But these pictures would be stored in some kind of vector representation so that one could be morphed into another gradually. The point is that no matter where you zoom in, there's no place you can find where the children don't resemble their parents. You'd also be able to get an intuitive understanding of the process of speciation by seeing how a single species lineage splits into two, while on the level of individuals there's no one individual that you can point to as marking the point at which the species diverge.
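To make the morphing idea a bit more concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `Individual` class, the idea that a body plan can be reduced to a plain list of numbers, and the use of simple linear interpolation (a real viewer would need a far richer representation, plus random variation). It only demonstrates the core point that a large difference between ancestor and descendant can be spread across many generations, so that each parent-child step is tiny.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Individual:
    """Hypothetical stand-in for one individual in the zoomed-in tree."""
    generation: int
    shape: List[float]  # assumed vector representation of the picture


def morph(a: List[float], b: List[float], t: float) -> List[float]:
    """Linearly blend shape a into shape b; t=0 gives a, t=1 gives b."""
    return [(1 - t) * x + t * y for x, y in zip(a, b)]


def lineage(ancestor: List[float], descendant: List[float],
            generations: int) -> List[Individual]:
    """Synthesize one individual per generation between two known shapes."""
    return [
        Individual(g, morph(ancestor, descendant, g / generations))
        for g in range(generations + 1)
    ]


def max_parent_child_difference(line: List[Individual]) -> float:
    """Largest change in any single shape value between a parent and child."""
    return max(
        max(abs(p - c) for p, c in zip(parent.shape, child.shape))
        for parent, child in zip(line, line[1:])
    )


if __name__ == "__main__":
    line = lineage([0.0, 1.0], [10.0, 3.0], generations=1000)
    print("first:", line[0].shape, "last:", line[-1].shape)
    print("largest parent-child step:", max_parent_child_difference(line))
```

The endpoints differ by 10 units in the first value, but no parent differs from its child by more than about a hundredth of a unit, which is the "children always resemble their parents" property the viewer is meant to show.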

By zooming in right at the top of the tree you'd be able to see (a theory of) abiogenesis (the first point at which information was passed on from parent to child).

A simple version of this program (just including a tiny minority of species) would be relatively easy to create and could still fulfill the basic educational purpose. But the more biologists collaborated on it and the more data the program was fed, the more interesting things could be done with the result. Ideally it would be made as accurate as possible according to current best theories. Of course, a huge amount of the data is currently unknown and would have to be synthesized (at high zoom-in levels, almost all of the details would need to be made up). So if the program were used for scientific purposes it would be good to have some way to visualize which aspects of the image are accurate according to current best theories and which aspects were synthesized.
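One way to keep track of which parts are real and which are made up would be to tag every node in the tree with where its data came from. The sketch below is only an illustration under my own assumptions: the `Provenance` labels, the `Node` class and the `count_by_provenance` helper are all hypothetical names, and a real program would probably want to tag individual features of an image rather than whole nodes.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List


class Provenance(Enum):
    """Hypothetical labels for where a node's data came from."""
    SUPPORTED = "supported by current best theories"
    SYNTHESIZED = "made up to fill a gap"


@dataclass
class Node:
    """One entry in the tree: a species, a population, or an individual."""
    name: str
    provenance: Provenance
    children: List["Node"] = field(default_factory=list)


def count_by_provenance(root: Node) -> Dict[Provenance, int]:
    """Walk the subtree under root and count nodes of each provenance."""
    counts = {p: 0 for p in Provenance}
    stack = [root]
    while stack:
        node = stack.pop()
        counts[node.provenance] += 1
        stack.extend(node.children)
    return counts


if __name__ == "__main__":
    # Toy tree: placeholder names, not real taxa.
    tree = Node("common ancestor", Provenance.SUPPORTED, [
        Node("lineage A", Provenance.SUPPORTED, [
            Node("individual A1", Provenance.SYNTHESIZED),
            Node("individual A2", Provenance.SYNTHESIZED),
        ]),
        Node("lineage B", Provenance.SUPPORTED),
    ])
    for provenance, n in count_by_provenance(tree).items():
        print(provenance.name, n)
```

A viewer could use counts like these (or the per-node tag directly) to shade synthesized regions differently, so that it's obvious at a glance how much of what you're looking at is guesswork.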

The data that's added to the program need not all be biological in nature - people could add their own family trees (and even those of their pets) as well (though reconciling low-level actual data with synthesized data could be tricky).